SemNR

Mentored by: Applied Materials

A complete classical + deep-learning denoising system for SEM images

Python

PyTorch

U-Net

FastAPI

MinIO

SQLite

PyQt6

OpenCV

Docker

Description

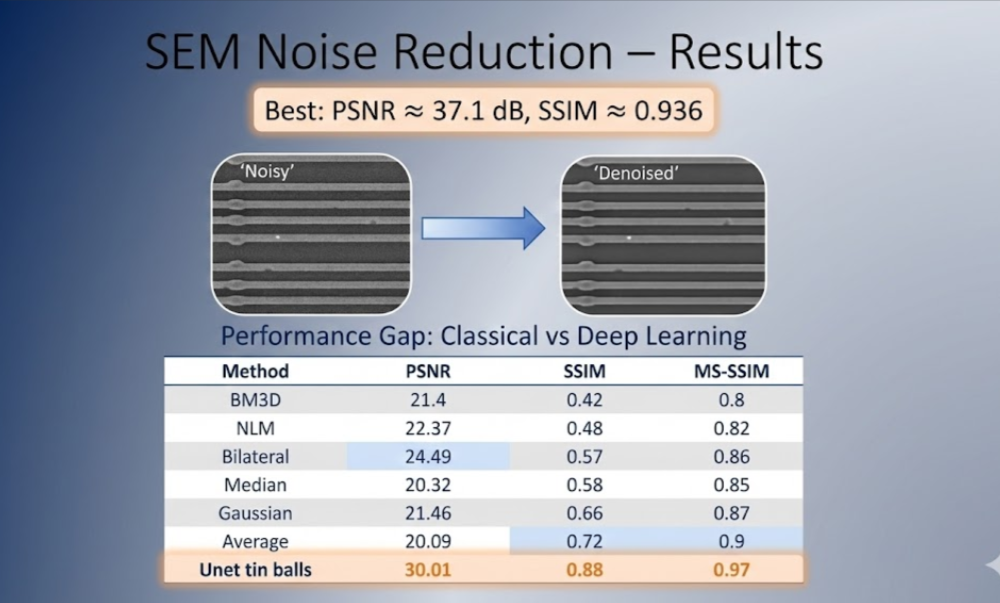

A full SEM denoising workflow that integrates classical filters (BM3D, Gaussian, bilateral, NLM) with U-Net deep-learning models trained on noisy-to-clean image pairs. It includes custom datasets (real Tin-balls image pairs and synthetic wafer images), a noise simulation pipeline, a multi-client FastAPI backend, a PyQt6 GUI, per-stage PSNR/SSIM metrics, MinIO-based dataset consistency, a pause/resume pipeline, and concurrent multi-model processing.
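To illustrate what a noise simulation step for building noisy/clean training pairs might look like, here is a minimal sketch. The project's actual noise model is not described on this page; the sketch assumes Poisson shot noise plus additive Gaussian read noise, and `simulate_sem_noise`, `synthetic_wafer`, `dose`, and `read_sigma` are hypothetical names chosen for illustration.

```python
import numpy as np


def simulate_sem_noise(clean, dose=50.0, read_sigma=0.02, seed=None):
    """Return a noisy copy of `clean`, a float image with values in [0, 1].

    Assumed model (not taken from the project): Poisson shot noise, where
    `dose` is the expected electron count per pixel at full intensity,
    followed by additive Gaussian read noise with std `read_sigma`.
    """
    rng = np.random.default_rng(seed)
    clean = np.clip(np.asarray(clean, dtype=np.float64), 0.0, 1.0)
    # Shot noise: sample counts, then rescale back to the [0, 1] range.
    shot = rng.poisson(clean * dose) / dose
    noisy = shot + rng.normal(0.0, read_sigma, size=clean.shape)
    return np.clip(noisy, 0.0, 1.0)


def synthetic_wafer(size=64, period=8):
    """Hypothetical stand-in for a synthetic wafer image: vertical lines."""
    cols = (np.arange(size) // (period // 2)) % 2
    return np.tile(0.2 + 0.6 * cols, (size, 1)).astype(np.float64)


# Build one noisy/clean training pair.
clean = synthetic_wafer()
noisy = simulate_sem_noise(clean, dose=50.0, read_sigma=0.02, seed=0)
```

Lowering `dose` makes the shot noise stronger, which is one way such a pipeline could generate pairs at several noise levels from the same clean image.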

Team Members

Cohort: Data Science Bootcamp 2025 (Data)

Responsibilities:

Developed a complete noise-reduction pipeline for SEM images, including preprocessing, structured model execution, and unified evaluation.

Implemented classical denoising baselines—Gaussian, Bilateral, Non-Local Means, and BM3D—within an extensible benchmarking framework.

Trained U-Net denoising models in Python/PyTorch on custom SEM datasets with optimized augmentation and hyperparameters.

Designed and deployed a FastAPI + Docker backend exposing REST endpoints for image upload, model selection, and result retrieval.

Integrated PostgreSQL and MinIO for storing images, benchmarks, and inference results; used Git for version control and team collaboration.

Built an automated evaluation suite computing PSNR and SSIM metrics and generating consistent comparisons across classical and deep-learning models.

Created a PyQt desktop client supporting model execution, visualization of every pipeline stage, and interactive comparison between methods.

Research: Dataset analysis and selection — evaluated multiple SEM datasets, created synthetic noisy samples, and studied which data sources yield the most effective denoising performance.

Research: Performance optimization with parallel execution of multiple models; benchmarking showed limited benefit for production use.

Implemented multi-client support, MinIO-based benchmark selection, and improved visualization features inspired by open issues, enhancing usability and scalability of the system.

...and more contributions not listed here
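The benchmarking and evaluation items above can be sketched as a small registry of denoisers scored with PSNR. This is an assumption-laden illustration, not the project's code: `DENOISERS`, `benchmark`, and `box_filter` are hypothetical names, and a plain mean filter stands in for the Gaussian, bilateral, NLM, and BM3D baselines so that the example needs only NumPy.

```python
import numpy as np


def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def box_filter(image, radius=1):
    """Mean filter over a (2r+1) x (2r+1) window with edge padding.

    Stand-in for a real classical baseline (illustration only).
    """
    image = np.asarray(image, dtype=np.float64)
    padded = np.pad(image, radius, mode="edge")
    k = 2 * radius + 1
    out = np.zeros_like(image)
    # Sum the k*k shifted views of the padded image, then average.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out / (k * k)


# Hypothetical registry: adding a method means adding one entry.
DENOISERS = {
    "identity": lambda img: img,  # no-op reference point
    "box3": box_filter,
}


def benchmark(clean, noisy, denoisers=DENOISERS):
    """Return {method name: PSNR in dB} for each registered denoiser."""
    return {name: psnr(clean, fn(noisy)) for name, fn in denoisers.items()}
```

Keeping every method behind the same `image -> image` signature is what lets classical filters and a trained model share one scoring loop. SSIM could be added alongside PSNR in the same way, for example via `skimage.metrics.structural_similarity` if scikit-image is available.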

Responsibilities:

Research and plan SEM image denoising methods (classical & deep learning)

Benchmark multiprocessing performance for image inference

Analyze thresholds for parallel execution & implement conditional parallelism

Implement Stop/Resume and Incremental Evaluation features

Update Model Table in GUI for improved user interaction

Investigate theory and applicability of chosen algorithm

Update GUI according to approved presentation designs

Design and plan GUI layout and visual style

Create Python function timing decorator with logging

Add option to upload entire folders of images

...and more contributions not listed here
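Two items in the list above, the timing decorator with logging and the threshold-based conditional parallelism, lend themselves to a short sketch. Everything named here is hypothetical: the logger name, `PARALLEL_THRESHOLD` and its value, and `run_inference` are not taken from the project, and a thread pool is used in place of the multiprocessing setup the team benchmarked, simply to keep the example self-contained.

```python
import functools
import logging
import time
from concurrent.futures import ThreadPoolExecutor

logger = logging.getLogger("semnr.timing")  # hypothetical logger name


def timed(func):
    """Log the wall-clock duration of every call to `func`."""
    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Runs whether the call returned or raised.
            elapsed = time.perf_counter() - start
            logger.info("%s took %.4f s", func.__name__, elapsed)
    return wrapper


PARALLEL_THRESHOLD = 8  # assumed cut-off; the real value is not stated


@timed
def run_inference(images, infer, threshold=PARALLEL_THRESHOLD, max_workers=4):
    """Apply `infer` to each image, going parallel only for large batches."""
    if len(images) < threshold:
        # Small batch: pool start-up overhead would outweigh any gain.
        return [infer(img) for img in images]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in input order.
        return list(pool.map(infer, images))
```

A call such as `run_inference(batch, model_fn)` then returns results in input order either way, and the decorator records how long the chosen path took, which is the kind of measurement needed to pick a sensible threshold.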

Responsibilities:

Developed a noise-reduction algorithm and a framework for evaluating it.

Implemented classical denoising baselines (Gaussian, Bilateral, etc.) for benchmarking.

Trained U-Net–based deep learning models in Python/PyTorch on custom datasets.

Conducted architecture experiments and systematic hyperparameter tuning (loss functions, learning rate).

Built an evaluation framework using PSNR, SSIM, and custom metrics.

Developed a FastAPI + Docker backend with PostgreSQL/MinIO storage.

Created a PyQt desktop client for visual and metric-based comparison of all methods.

...and more contributions not listed here